Hello, Apache Hudi Community!

Welcome to the March 2025 edition of the Hudi newsletter, brought to you by Onehouse.ai! This month, we bring you another round of project updates, community spotlights, and technical deep dives that continue to shape the future of data lakehouses.

Community Events

March saw a series of impactful events across the Apache Hudi community, bringing together contributors, users, and adopters to share ideas, use cases, and progress.

Hudi Asia Community Meetup

Led by the Kuaishou team, the first-ever Apache Hudi Meetup Asia was held on March 29.

📍 231 in-person attendees

📺 16,673 total views across platforms

The event reflected growing momentum, with strong interest in Hudi’s roadmap and adoption stories.

Community Sync: Southwest Airlines on Data Modernization with Hudi

As part of the March Hudi Community Sync, Koti Darla, Data Engineering Lead at Southwest Airlines, shared the team's journey of modernizing the airline’s data infrastructure using Apache Hudi. The session offered a behind-the-scenes look at how the team transitioned from legacy systems to a high-performance, real-time data platform. Check out the recording here.

Hudi Developer Sync: RFC Spotlight - Storage-Based Locking with Conditional Writes

In this month’s Developer Sync, contributor Alex Rhee presented RFC-91, which proposes a storage-native locking mechanism for Apache Hudi using conditional writes on cloud storage systems such as S3, GCS, and Azure. By introducing a lock provider that relies on atomic conditional writes directly in cloud storage, this approach eliminates the need for external coordination systems like ZooKeeper. Join the Hudi community's monthly syncs here.
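To make the idea concrete, here is a minimal Python sketch of a lock built on conditional writes. It does not reflect RFC-91's actual design or Hudi's lock provider API: `InMemoryObjectStore`, `ConditionalWriteLock`, the `owner|expiry` lock-file format, and the TTL-based takeover are all hypothetical. The in-memory store stands in for a cloud object store that atomically enforces create-if-absent and compare-and-swap style preconditions on the server side.

```python
import time
import uuid
from dataclasses import dataclass
from typing import Dict, Optional


class PreconditionFailed(Exception):
    """Raised when a conditional write's precondition does not hold."""


@dataclass
class StoredObject:
    body: bytes
    etag: str


class InMemoryObjectStore:
    """Hypothetical stand-in for an object store with conditional writes."""

    def __init__(self) -> None:
        self._objects: Dict[str, StoredObject] = {}

    def get(self, key: str) -> Optional[StoredObject]:
        return self._objects.get(key)

    def put_if_absent(self, key: str, body: bytes) -> str:
        # Succeeds only if no object exists under `key` (create-if-absent).
        if key in self._objects:
            raise PreconditionFailed(key)
        etag = uuid.uuid4().hex
        self._objects[key] = StoredObject(body, etag)
        return etag

    def put_if_match(self, key: str, body: bytes, etag: str) -> str:
        # Succeeds only if the object is unchanged since we read `etag`.
        current = self._objects.get(key)
        if current is None or current.etag != etag:
            raise PreconditionFailed(key)
        new_etag = uuid.uuid4().hex
        self._objects[key] = StoredObject(body, new_etag)
        return new_etag


class ConditionalWriteLock:
    """Hypothetical lock: a single object whose body is 'owner|expiry'."""

    def __init__(self, store: InMemoryObjectStore, lock_key: str,
                 owner: str, ttl_s: float = 60.0) -> None:
        self.store, self.lock_key = store, lock_key
        self.owner, self.ttl_s = owner, ttl_s
        self._etag: Optional[str] = None

    def try_acquire(self) -> bool:
        now = time.time()
        body = f"{self.owner}|{now + self.ttl_s}".encode()
        current = self.store.get(self.lock_key)
        try:
            if current is None:
                self._etag = self.store.put_if_absent(self.lock_key, body)
                return True
            _, expires_at = current.body.decode().rsplit("|", 1)
            if float(expires_at) < now:
                # Take over an expired lock only if nobody else changed it first.
                self._etag = self.store.put_if_match(self.lock_key, body, current.etag)
                return True
            return False  # held by someone else and not yet expired
        except PreconditionFailed:
            return False  # lost the race to another writer

    def release(self) -> None:
        if self._etag is None:
            return
        try:
            # Mark the lock as expired, but only if we still own this version.
            self.store.put_if_match(self.lock_key, f"{self.owner}|0".encode(), self._etag)
        except PreconditionFailed:
            pass  # another writer already took over after our lease expired
        finally:
            self._etag = None
```

Because the lease expires after `ttl_s`, a real writer would also need to renew (heartbeat) the lock while it works; this sketch omits renewal for brevity.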

Project Updates

GitHub ❤️⭐️ https://github.com/apache/hudi

PR#13017: Users can now set a specific number of buckets per partition through a rule engine (for example, regular-expression matching on partitions). For existing partitions, an offline command reorganizes the data using insert overwrite; writes to the affected partition must be stopped while it runs.
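The sketch below shows the general idea of rule-based bucket counts in Python. The rule format (a list of regex and bucket-count pairs where the first match wins), the `PartitionBucketResolver` class, and the use of CRC32 for hashing are hypothetical illustrations, not the configuration syntax or hash function introduced in PR#13017.

```python
import re
import zlib
from typing import List, Tuple

# Hypothetical rule: (regex matched against the partition path, bucket count).
Rule = Tuple[str, int]


class PartitionBucketResolver:
    """Resolves a per-partition bucket count; first matching rule wins."""

    def __init__(self, rules: List[Rule], default_buckets: int) -> None:
        self._rules = [(re.compile(pattern), n) for pattern, n in rules]
        self._default = default_buckets

    def num_buckets(self, partition_path: str) -> int:
        for pattern, n in self._rules:
            if pattern.fullmatch(partition_path):
                return n
        return self._default  # no rule matched

    def bucket_id(self, partition_path: str, record_key: str) -> int:
        # Stable hash so a key always lands in the same bucket.
        # CRC32 is an illustrative choice, not necessarily Hudi's hash.
        h = zlib.crc32(record_key.encode("utf-8"))
        return h % self.num_buckets(partition_path)


resolver = PartitionBucketResolver(
    rules=[(r"dt=2025-03-.*", 64), (r"dt=2024-.*", 8)],
    default_buckets=16,
)
print(resolver.num_buckets("dt=2025-03-29"))
print(resolver.bucket_id("dt=2024-12-01", "order-42"))
```

Because a record's bucket is its key hash modulo the partition's bucket count, changing the count for a partition that already holds data would map existing keys to different buckets. That is why existing partitions have to be rewritten (here, via insert overwrite) rather than simply reconfigured.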

PR#12919: Adds support for bloom filter options in Spark SQL when creating a bloom filter expression index.
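Bloom filter options matter because they trade index size against false positives. As an illustration, the standard sizing formulas for a bloom filter with n expected entries and a target false-positive probability p give m = -n ln p / (ln 2)^2 bits and k = (m / n) ln 2 hash functions. The helper below computes them; the function name is hypothetical, and it does not describe which exact options PR#12919 exposes.

```python
import math
from typing import Tuple


def bloom_filter_size(num_entries: int, fpp: float) -> Tuple[int, int]:
    """Return (bits, hash_functions) for an optimally sized bloom filter."""
    if num_entries <= 0 or not 0.0 < fpp < 1.0:
        raise ValueError("num_entries must be > 0 and 0 < fpp < 1")
    # m = -n ln(p) / (ln 2)^2, rounded up to a whole bit
    bits = math.ceil(-num_entries * math.log(fpp) / (math.log(2) ** 2))
    # k = (m / n) ln 2, rounded to the nearest integer (at least one hash)
    hashes = max(1, round(bits / num_entries * math.log(2)))
    return bits, hashes


print(bloom_filter_size(1_000, 0.01))
```

For 1,000 entries at a 1% false-positive rate this works out to roughly 9,586 bits and 7 hash functions; tightening the rate or raising the entry count grows the filter accordingly.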

PR#13014: Spark insert overwrite now supports the row writer.

PR#12967: The Flink writer for MOR tables now supports writing RowData to Parquet log blocks. It introduces a RowData handle that writes RowData directly into log files without converting to Avro.

Community Blogs/Socials

📙Blogs/Videos

Powering Amazon Unit Economics at Scale Using Apache Hudi - Amazon Team

In this blog, Amazon's Profit Intelligence team detailed their development of Nexus, a configuration-driven platform powered by Apache Hudi, designed to scale unit economics across thousands of retail use cases. Nexus manages over 1,200 tables, processes hundreds of billions of rows daily, and handles approximately 1 petabyte of data churn each month.

From Transactional Bottlenecks to Lightning-Fast Analytics - Uptycs Team

Akash Sankritya and the Uptycs team share how they evolved from PostgreSQL bottlenecks and federated Trino queries to a scalable, real-time analytics platform powered by Apache Hudi. By centralizing fragmented datasets and improving join performance, they unlocked faster insights across their growing data landscape.

Data Deduplication Strategies in an Open Lakehouse Architecture - Dipankar & Aditya, Onehouse.ai

This blog post breaks down how Apache Hudi tackles data duplication with native deduplication at the ingestion, merge, and table service levels. It contrasts this with Delta Lake and Iceberg, which rely on manual MERGE operations and lack built-in constraints, making Hudi a strong choice for workloads where duplicates must be handled automatically.
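The core of ingestion-time deduplication can be illustrated with a precombine step: among records sharing a record key, keep the one with the highest ordering value. Hudi uses the record key and the precombine field for these roles; the `precombine` function below is a hypothetical, simplified Python sketch rather than Hudi's implementation, and it ignores merge modes, deletes, and partitioning.

```python
from typing import Dict, Hashable, Iterable, List


def precombine(records: Iterable[dict], key_field: str,
               ordering_field: str) -> List[dict]:
    """Keep one record per key: the one with the largest ordering value."""
    latest: Dict[Hashable, dict] = {}
    for rec in records:
        key = rec[key_field]
        current = latest.get(key)
        # Ties go to the later record in this sketch.
        if current is None or rec[ordering_field] >= current[ordering_field]:
            latest[key] = rec
    return list(latest.values())


batch = [
    {"order_id": 1, "ts": 100, "status": "created"},
    {"order_id": 1, "ts": 105, "status": "shipped"},
    {"order_id": 2, "ts": 101, "status": "created"},
    {"order_id": 1, "ts": 103, "status": "paid"},  # late-arriving, older event
]
deduped = precombine(batch, key_field="order_id", ordering_field="ts")
print(deduped)
```

Note that the late-arriving `paid` event does not overwrite the newer `shipped` state: ordering by an event-time field, rather than arrival order, is what keeps out-of-order duplicates from regressing a record.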

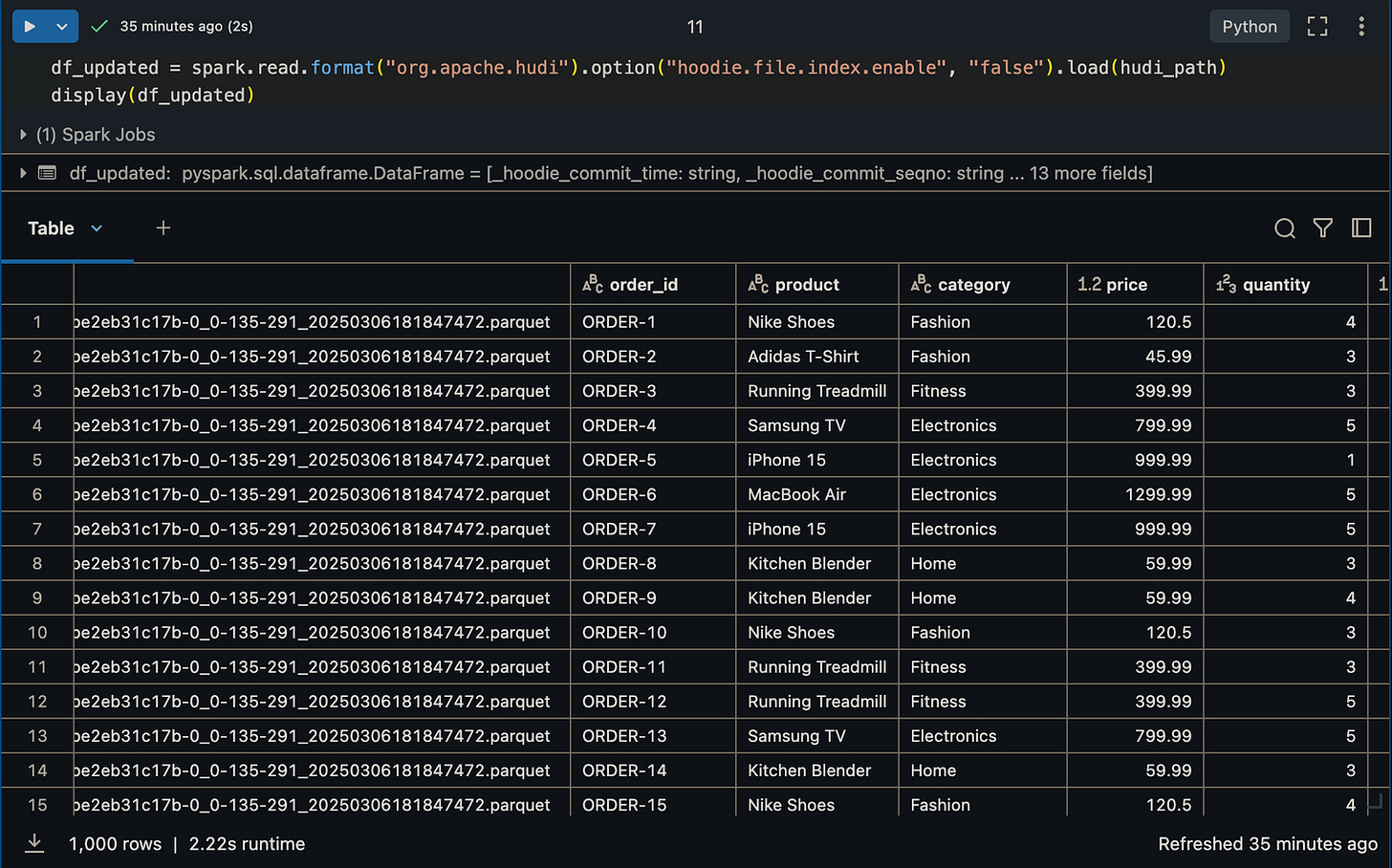

Building an Amazon Sales Analytics Pipeline with Apache Hudi on Databricks - Sameer Shaik

In his article, Sameer Shaik demonstrates how to set up an efficient data pipeline for sales data. He covers configuring Apache Hudi within a Databricks environment, generating sample sales data, and performing data ingestion, updates, and real-time analysis.

21 Unique Reasons Why Apache Hudi Should Be Your Next Data Lakehouse - Vinoth Chandar

In this post, Hudi's original creator and PMC Chair, Vinoth Chandar, shares 21 unique differentiators that set Hudi apart from other table formats, spanning advanced indexing, concurrency controls, metadata management, and more.

📱Socials

Why Apache Hudi Stands Out in the Lakehouse Landscape

This post by Rahul breaks down Hudi’s edge over Iceberg and Delta Lake, highlighting its strengths in near real-time processing, record-level upserts and deletes, fast writes via MOR, and incremental querying, all built for scale. He also shares real-world adoption stories from organizations such as Uber, Alibaba, Tencent, and Udemy.

Inside Grab’s Real-Time Data Lake Architecture with Hudi

Subhojit shares how Grab tackled write amplification and update inefficiencies in their lakehouse by adopting a dual-architecture pipeline with Apache Hudi. The post highlights features such as bucket indexing, async compaction, and schema translation that help balance low-latency writes with optimal read performance.

Distributed ML Workloads in the Lakehouse with Ray + Apache Hudi

This post shows how to build distributed ML pipelines using Apache Hudi and Ray, powered by the new hudi-rs integration. By combining Hudi’s incremental processing and time travel with Ray’s parallel execution, you can run scalable batch inference and training, all in Python.

Hudi Resources

Getting started 🏁

If you are just getting started with Apache Hudi, here are some quick guides to delve into the practical aspects.

Official docs 📗

Join Slack 🤝

Discuss issues, help others & learn from the community. Our Slack channel is home to 4,000+ Hudi users.

Socials 📱

Join our social channels to stay in the know about everything from deep technical concepts to tips and tricks, plus interesting things happening in the community.

Twitter/X: https://twitter.com/apachehudi

Weekly Office Hours 💼

Hudi PMC members and committers hold office hours to answer questions interactively, on a first-come, first-served basis. This is a great opportunity to bring any questions you have.

Interested in Contributing to Hudi? 👨🏻‍💻

The Apache Hudi community welcomes contributions from anyone! Here are a few ways you can get involved.

Rest of the Data Ecosystem

Parquet Bloom Filters in DuckDB - Hannes Mühleisen | DuckDB

The Trouble with Leader Elections (in distributed systems) - Joe Magerramov

To B or not to B: B-Trees with Optimistic Lock Coupling - Philipp Fent | CedarDB

Efficient Filter Pushdown in Parquet - Xiangpeng Hao

The Open Table Format War: Merely a Battle on the Path to Engineering a Truly Open Data Platform - Pauline Brown | Onehouse.ai

Research Paper - LavaStore: ByteDance's Purpose-Built, High-Performance, Cost-Effective Local Storage Engine for Cloud Services | ByteDance

Have any feedback on documentation, content ideas or the project? Drop us a message!